Audits are hard, but when you partner with a firm like KirkpatrickPrice, it will be worth it.

That’s exactly what Softdocs learned when they asked us to be their partner on their newest compliance initiative: become compliant with the NIST 800-53 framework via a StateRAMP audit within one year. Learn exactly how we worked together to make sure this audit journey ended in success.

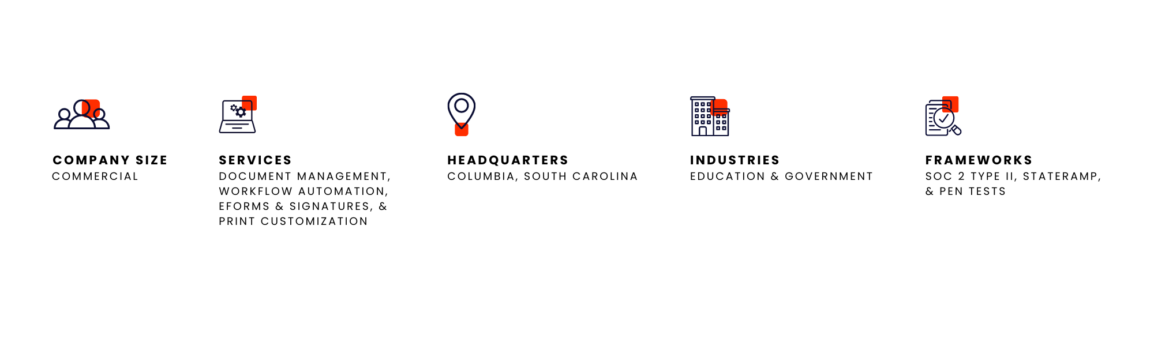

About Softdocs

Softdocs provides process automation and document management solutions to schools, states, counties, and cities. Their solutions enable colleges, universities, K-12 school districts, and state and municipal governments to improve how they serve people, create new efficiencies, and enable the future of work.

Challenge

Comply with NIST 800-53 in one year.

Solution

A customized roadmap to compliance.

Outcome

More clients and more secure data.

The Details

Implement and Comply with a Brand-New Framework in Under a Year

Softdocs wanted to strengthen their cybersecurity posture. They are always looking for ways to level up their security program because keeping their clients’ data safe is their number one priority.

They set an aggressive goal to create a more secure cloud infrastructure that is compliant with the NIST 800-53 framework via a StateRAMP audit within one year.

Partner with Experts to Create a Custom Roadmap to Success

Softdocs started looking toward NIST compliance in September 2022, beginning with a self-assessed gap analysis. During that process, Director of Operations and Compliance Officer Terri McKinney noted, “It’s too hard to do alone; you need guidance.” After working through StateRAMP’s 320 controls on her own, she knew Softdocs needed KirkpatrickPrice as a partner to provide expertise and guidance as they began this challenging compliance journey.

Softdocs began working with KirkpatrickPrice in 2018 on a SOC 2 Type II project. They’ve had successful audits five years in a row and built a strong foundation with the guidance of their auditor, Herbert.

So, when it came time for their NIST 800-53 project, Terri knew she wanted to partner with us.

“We selected KirkpatrickPrice because we don’t feel like a number with them; it’s truly a partnership. Our audit gives us expert advice on what we need to do to increase our level of security.”

Together, we determined the scope of the project and set four milestones:

1. Asset-Based Risk Assessment

Softdocs’ auditor, Herbert, flew to their office in Columbia, South Carolina, to work with them on an asset-based risk assessment. Together, they determined that Softdocs’ cloud environment needed to be measured against the NIST controls. The risk assessment assured the team that they were applying the controls correctly and that the project would focus on the right areas of Softdocs’ program.

2. Gap Analysis

StateRAMP includes 320 controls. In one week, Herbert, Terri, and the Softdocs security team compared Softdocs’ current security program against all of them to identify any gaps and areas that needed improvement.

3. Remediation

Because of the foundational work Softdocs had done with KirkpatrickPrice over the previous five years, they already had the major pieces in place. The gap analysis highlighted areas they could improve with small changes and prepared them to face the audit with confidence. Herbert guided them through this process so they could be sure their controls were properly designed.

“Herbert did a great job interpreting controls and then helping us implement them into our environment. He was the guiding force determining how each control related to our specific environment.”

4. The Audit

Their audit started in December 2023 and concluded in April 2024, meaning Softdocs met their goal of completing the audit by 2024! The audit followed KirkpatrickPrice’s consultative audit approach, ensuring Softdocs was never alone during their audit.

Lead Practitioner Herbert McMorris and Client Success Manager Emily Buser partnered with Softdocs throughout the audit process. They organized the project, kept the team on track, and provided a safe space for the team to work candidly through the most challenging portions of the audit.

Though the audit was one of the biggest challenges Softdocs has faced, Terri said it was 100% worth it. By setting such an aggressive goal, Softdocs almost “forced their own hand,” she said. There are always ways to improve, but improving is tough because “it’s way easier to drag your feet than level-up your entire security program.” The audit deadline made them commit to the process, and success depended on buy-in from every person in the organization. They knew it wasn’t going to be easy, and it wasn’t. They did the hard work and are now reaping the rewards.

“You can’t do it without a team, and KP has delivered.”

Win New Business by Leveling Up Your Security Program

After successfully completing their StateRAMP audit, Softdocs has proven how seriously they take security and why that is so critical to their success.

“We are dedicated to implementing the processes necessary to hyperfocus on security all year long, not just during an audit.”

They have also been able to win new business with their NIST compliance, which they describe as the differentiator between them and their competitors.

Softdocs understands the importance of security to the success of their business. They know it makes a difference in the quality of work they can provide to their customers, and in the types of customers they attract. We’re proud to be their partner in compliance and look forward to working with them in the future to continuously elevate their security practices.

“Orgs should embrace the work that an auditing firm brings to the table. Wouldn’t you much rather your auditor find a problem than an evil actor looking for a hole that you haven’t plugged? That to me is the most important thing.”

Level up your security program with KirkpatrickPrice.

Audits are hard, but when you work with an expert who’s been in your shoes, it will always be worth it. KirkpatrickPrice will be your partner in compliance so you can be confident that your cybersecurity and compliance audit will end in success.

Connect with an expert today to learn what it’s like to have a true partner in compliance.

Together we can:

- Identify the audit frameworks and services that benefit your organization’s unique compliance needs.

- Schedule a demo of the Online Audit Manager.

- Make sure your company finds success on its compliance journey.